Content moderation involves creating rules to ensure social media communities offer accurate information in a respectful environment. While that might sound like a fairly straightforward practice, it’s far from it.

Simply put, some people spew false or hateful messages on social media, and that content isn't easy to regulate. There's a lot of debate about who's responsible for moderating toxic content, so much so that brands are changing their advertising budgets and implementing their own social media guidelines.

Content moderation will be an important topic this year. Here’s what you should know:

Content moderation purpose and issues

Social media platforms typically have content moderation policies and processes to review, flag, and remove content that spreads misinformation, contains hate speech, or creates safety problems, but these systems aren't perfect.

Most platforms use machine learning and other algorithms to automate the moderation process, but these systems sometimes miss harmful posts or flag harmless ones.

Each platform has its own guidelines, so moderation is inconsistent across platforms. A post that gets flagged on Facebook might not be flagged on Twitter, especially since Twitter's new owner, Elon Musk, made drastic cuts to its content moderation team and reinstated accounts that were previously banned.

How content is moderated has become such a hot topic that it has reached the U.S. Supreme Court. This year, SCOTUS will hear two cases that examine how much responsibility social media platforms bear for moderating content.

Brands pull back ad spend

Flawed content moderation also creates an advertising problem for brands. If content isn’t moderated properly, a brand’s paid ad could be placed alongside inaccurate or unsavory content. For example, big-name brands have pulled ads from YouTube after their ads ran alongside extremist videos, including ISIS content.

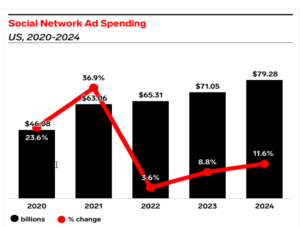

While content moderation issues have existed for years, Insider Intelligence believes they're contributing to a slowdown in ad spend. Take a look at the chart: from 2020 to 2021, the amount brands spent on social ads increased by 37%, but between 2022 and 2024, ad spending is expected to grow by just three or four percent each year.

According to Insider Intelligence, content moderation, or the lack thereof, is likely causing brands to pull back so they aren't associated with distasteful content.

Tips for brands to create their own moderation rules

Since moderation is a bit like the Wild West, brands shouldn’t rely on social media platforms to govern content. Instead, it’s best practice for brands to have their own guidelines.

If your customers submit user-generated content (UGC), comment on posts, or engage with the brand on social, what's expected of them? What's allowed, and what crosses a line? To help create your own set of content moderation guidelines, consider these tips:

Know what you can and can’t moderate

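The First Amendment protects free speech from government restriction, and each platform has its own rules about what page owners can hide or delete. Review these rules so you know when and how you can legally take action.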

Create and share a set of guidelines

You need to create a written set of guidelines that your company will follow. These guidelines will apply to all content and should be publicly shared on your website, so everyone understands what is and isn’t okay to say or create.

Your content moderation policy should include:

- Purpose of the community

- A list of desired behaviors

- A list of behaviors that won't be tolerated (be explicit)

- Consequences of breaking the rules, which might include editing or removing content or banning members

- Who addresses problems and has the final say

Don’t remove every negative comment

Content moderation isn't meant to scrub every negative review from your social media or website. Taking moderation too far can harm your brand. Rather than deleting poor reviews, respond thoughtfully. If there was a problem, apologize and offer to speak to the customer privately.

Focus moderation on content that peddles misinformation, contains hate speech, threatens public safety, or uses obscenities.

Put a person in charge

It’s not enough to create the guidelines and share them with your audience; you need to enforce the rules. Put someone in charge of moderation and make sure there’s a plan in place to review content.

There are two ways to monitor content: manually reading posts or implementing a tool to do it for you. If you have a fairly small social audience, manual reviews will likely work. If you have a larger, engaged audience, you can create a content team that focuses on moderation and implement a moderation tool like Respondology or Besedo that detects and removes toxic content.

Even with a tool in place, someone should still check on its effectiveness.

This year, your brand will likely discuss moderation and how it impacts your marketing efforts. It’s important to have open conversations about it and make a plan that caters to your brand’s needs.